Resource-rational account of sequential effects in human prediction

Abstract

An abundant literature reports on ‘sequential effects’ observed when humans make predictions on the basis of stochastic sequences of stimuli. Such sequential effects represent departures from an optimal, Bayesian process. A prominent explanation posits that humans are adapted to changing environments, and erroneously assume non-stationarity of the environment, even if the latter is static. As a result, their predictions fluctuate over time. We propose a different explanation in which sub-optimal and fluctuating predictions result from cognitive constraints (or costs), under which humans however behave rationally. We devise a framework of costly inference, in which we develop two classes of models that differ by the nature of the constraints at play: in one case the precision of beliefs comes at a cost, resulting in an exponential forgetting of past observations, while in the other beliefs with high predictive power are favored. To compare model predictions to human behavior, we carry out a prediction task that uses binary random stimuli, with probabilities ranging from 0.05 to 0.95. Although in this task the environment is static and the Bayesian belief converges, subjects’ predictions fluctuate and are biased toward the recent stimulus history. Both classes of models capture this ‘attractive effect’, but they depart in their characterization of higher-order effects. Only the precision-cost model reproduces a ‘repulsive effect’, observed in the data, in which predictions are biased away from stimuli presented in more distant trials. Our experimental results reveal systematic modulations in sequential effects, which our theoretical approach accounts for in terms of rationality under cognitive constraints.

Editor's evaluation

This valuable work addresses a long-standing empirical puzzle from a new computational perspective. The authors provide convincing evidence that attractive and repulsive sequential effects in perceptual decisions may emerge from rational choices under cognitive resource constraints rather than adjustments to changing environments. It is relevant to understanding how people represent uncertain events in the world around them and make decisions, with broad applications to economic behavior.

https://doi.org/10.7554/eLife.81256.sa0Introduction

In many situations of uncertainty, some outcomes are more probable than others. Knowing the probability distributions of the possible outcomes provides an edge that can be leveraged to improve and speed up decision making and perception (Summerfield and de Lange, 2014). In the case of choice reaction-time tasks, it was noted in the early 1950s that human reactions were faster when responding to a stimulus whose probability was higher (Hick, 1952; Hyman, 1953). In addition, faster responses were obtained after a repetition of a stimulus (i.e., when the same stimulus was presented twice in a row), even in the case of serially-independent stimuli (i.e., when the preceding stimulus carried no information on subsequent ones; Hyman, 1953; Bertelson, 1965). The observation of this seemingly suboptimal behavior has motivated in the following decades a profuse literature on ‘sequential effects’, i.e., on the dependence of reaction times on the recent history of presented stimuli (Kornblum, 1967; Soetens et al., 1985; Cho et al., 2002; Yu and Cohen, 2008; Wilder et al., 2009; Jones et al., 2013; Zhang et al., 2014; Meyniel et al., 2016). These studies consistently report a recency effect whereby the more often a simple pattern of stimuli (e.g. a repetition) is observed in recent stimulus history, the faster subjects respond to it. In tasks in which subjects are asked to make predictions about sequences of random binary events, sequential effects are also observed and they have given rise since the 1950s to a rich literature (Jarvik, 1951; Edwards, 1961; McClelland and Hackenberg, 1978; Matthews and Sanders, 1984; Gilovich et al., 1985; Ayton and Fischer, 2004; Burns and Corpus, 2004; Croson and Sundali, 2005; Bar-Eli et al., 2006; Oskarsson et al., 2009; Plonsky et al., 2015; Plonsky and Erev, 2017; Gökaydin and Ejova, 2017).

Sequential effects are intriguing: why do subjects change their behavior as a function of the recent past observations when those are in fact irrelevant to the current decision? A common theoretical account is that humans infer the statistics of the stimuli presented to them, but because they usually live in environments that change over time, they may believe that the process generating the stimuli is subject to random changes even when it is in fact constant (Yu and Cohen, 2008; Wilder et al., 2009; Zhang et al., 2014; Meyniel et al., 2016). Consequently, they may rely excessively on the most recent stimuli to predict the next ones. In several studies, this was heuristically modeled as a ‘leaky integration’ of the stimuli, that is, an exponential discounting of past observations (Cho et al., 2002; Yu and Cohen, 2008; Wilder et al., 2009; Jones et al., 2013; Meyniel et al., 2016). Here, instead of positing that subjects hold an incorrect belief on the dynamics of the environment and do not learn that it is stationary, we propose a different account, whereby a cognitive constraint is hindering the inference process and preventing it from converging to the correct, constant belief about the unchanging statistics of the environment. This proposal calls for the investigation of the kinds of choice patterns and sequential effects that would result from different cognitive constraints at play during inference.

We derive a framework of constrained inference, in which a cost hinders the representation of belief distributions (posteriors). This approach is in line with a rich literature that views several perceptual and cognitive processes as resulting from a constrained optimization: the brain is assumed to operate optimally, but within some posited limits on its resources or abilities. The ‘efficient coding’ hypothesis in neuroscience (Ganguli and Simoncelli, 2016; Wei and Stocker, 2015; Wei and Stocker, 2017; Prat-Carrabin and Woodford, 2021c) and the ‘rational inattention’ models in economics (Sims, 2003; Woodford, 2009; Caplin et al., 2019; Gabaix, 2017; Azeredo da Silveira and Woodford, 2019; Azeredo da Silveira et al., 2020) are examples of this approach, which has been called ‘resource-rational analysis’ (Griffiths et al., 2015; Lieder and Griffiths, 2019). Here, we investigate the proposal that human inference is resource-rational, i.e., optimal under a cost. As for the nature of this cost, we consider two natural hypotheses: first, that a higher precision in belief is harder for subjects to achieve, and thus that more precise posteriors come with higher costs; and second, that unpredictable environments are difficult for subjects to represent, and thus that they entail higher costs. Under the first hypothesis, the cost is a function of the belief held, while under the second hypothesis the cost is a function of the inferred environment. We show that the precision cost predicts ‘leaky integration’: in the resulting inference process, remote observations are discarded. Crucially, beliefs do not converge but fluctuate instead with the recent stimulus history. By contrast, under the unpredictability cost, the inference process does converge, although not to the correct (Bayesian) posterior, but rather to a posterior that implies a biased belief on the temporal structure of the stimuli. In both cases, sequential effects emerge as the result of a constrained inference process.

We examine experimentally the degree to which the models derived from our framework account for human behavior, with a task in which we repeatedly ask subjects to predict the upcoming stimulus in sequences of Bernoulli-distributed stimuli. Most studies on sequential effects only consider the equiprobable case, in which the two stimuli have the same probability. However, the models we consider here are more general than this singular case and they apply to the entire range of stimulus probability. We thus manipulate in separate blocks of trials the stimulus generative probability (i.e., the Bernoulli probability that parameterizes the stimulus) to span the range from 0.05 to 0.95 by increments of 0.05. This enables us to examine in detail the behavior of subjects in a large gamut of environments from the singular case of an equiprobable, maximally-uncertain environment (with a probability of 0.5 for both stimuli) to the strongly-biased, almost-certain environment in which one stimulus occurs with probability 0.95.

To anticipate on our results, the predictions of subjects depend on the stimulus generative probability, but also on the history of stimuli. We examine whether the occurrence of a stimulus, in past trials, increase the proportion of predictions identical to this stimulus (‘attractive effect’), or whether it decreases this proportion (‘repulsive effect’). The two costs presented above reproduce qualitatively the main patterns in subjects’ data, but they make distinct predictions as to the modulations of the recency effect as a function of the history of stimuli, beyond the last stimulus. We show that the responses of subjects exhibit an elaborate, and at times counter-intuitive, pattern of attractive and repulsive effects, and we compare these to the predictions of our models. Our results suggest that the brain infers a stimulus generative probability, but under a constraint on the precision of its internal representations; the inferred generative process may be more general than the actual one, and include higher-order statistics (e.g. transition probabilities), in contrast with the Bernoulli-distributed stimulus used in the experiment.

We present the behavioral task and we examine the predictions of subjects — in particular, how they vary with the stimulus generative probability, and how they depend, at each trial, on the preceding stimulus. We then introduce our framework of inference under constraint, and the two costs we consider, from which we derive two families of models. We examine the behavior of these models and the extent to which they capture the behavioral patterns of subjects. The models make different qualitative predictions about the sequential effects of past observations, which we confront to subjects’ data. We find that the predictions of subjects are qualitatively consistent with a model of inference of conditional probabilities, in which precise posteriors are costly.

Results

Subjects’ predictions of a stimulus increase with the stimulus probability

In a computer-based task, subjects are asked to predict which of two rods the lightning will strike. On each trial, the subject first selects by a key press the left- or right-hand-side rod presented on screen. A lightning symbol (which is here the stimulus) then randomly strikes either of the two rods. The trial is a success if the lightning strikes the rod selected by the subject (Figure 1a). The location of the lightning strike (left or right) is a Bernoulli random variable whose parameter (the stimulus generative probability) we manipulate across blocks of 200 trials: in each block, is a multiple of 0.05 chosen between 0.05 and 0.95. Changes of block are explicitly signaled to the subjects: each block is presented as a different town exposed to lightning strikes. The subjects are not told that the locations of the strikes are Bernoulli-distributed (in fact no information is given to them regarding how the locations are determined). Moreover, in order to capture the ‘stationary’ behavior of subjects, which presumably prevails after ample exposure to the stimulus, each block is preceded by 200 passive trials in which the stimuli (sampled with the probability chosen for the block) are successively shown with no action from the subject (Figure 1b); this is presented as a ‘useful track record’ of lightning strikes in the current town. (To verify the stationarity of subjects’ behavior, we compare their responses in the first and second halves of the 200 trials in which they are asked to make predictions. In most cases we find no significant differences. See Appendix.) We provide further details on the task in Methods.

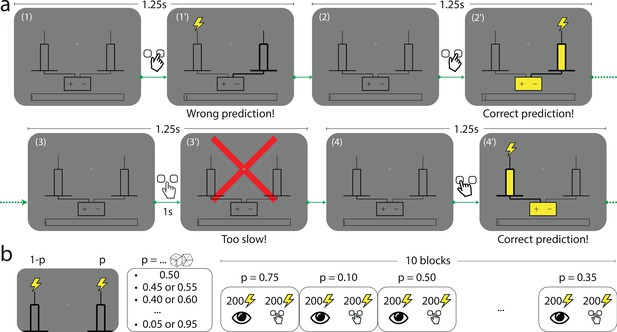

The Bernoulli sequential prediction task.

(a) In each successive trial, the subject is asked to predict which of two rods the lightning will strike. (1) A trial begins. (1’) The subject chooses the right-hand-side rod (bold lines), but the lightning strikes the left one (this feedback is given immediately after the subject makes a choice). (2) 1.25 s after the beginning of the preceding trial, a new trial begins. (2’) The subject chooses the right-hand-side rod, and this time the lightning strikes the rod chosen by the subject (immediate feedback). The rod and the connected battery light up (yellow), indicating success. (3) A new trial begins. (3’) If after 1 s the subject has not made a prediction, a red cross bars the screen and the trial ends. (4) A new trial begins. (4’) The subject chooses the left-hand-side rod, and the lightning strikes the same rod. In all cases, the duration of a trial is 1.25 s. (b) In each block of trials, the location of the lightning strike is a Bernoulli random variable with parameter , the stimulus generative probability. Each subject experiences 10 blocks of trials. The stimulus generative probability for each block is chosen randomly among the 19 multiples of 0.05 ranging from 0.05 to 0.95, with the constraint that if is chosen for a given block, neither nor can be chosen in the subsequent blocks; as a result for any value among these 19 probabilities spanning the range from 0.05 to 0.95, there is one block in which one of the two rods receives the lightning strike with probability . Within each block the first 200 trials consist in passive observation and the 200 following trials are active trials (depicted in panel a).

The behavior of subjects varies with the stimulus generative probability, . In our analyses, we are interested in how the subjects’ predictions of an event (left or right strike) vary with the probability of this event, regardless of its nature (left or right). Thus, for instance, we would like to pool together the trials in which a subject makes a rightward prediction when the probability of a rightward strike is 0.7, and the trials in which a subject makes a leftward prediction when the probability of a leftward strike is also 0.7. Therefore, throughout the paper, we do not discuss whether subjects predict ‘right’ or ‘left’, and instead we discuss whether they predict the event ‘A’ or the complementary event ‘B’: in different blocks of trials, A (and similarly B) may refer to different locations; but importantly, B always corresponds to the location opposite to A, and denotes the probability of A (thus B has probability ). This allows us, given a probability , to pool together the responses obtained in blocks of trials in which one of the two locations has probability . One advantage of this pooling is that it reduces the noise in data. Looking at the unpooled data, however, does not change our conclusions; see Appendix.

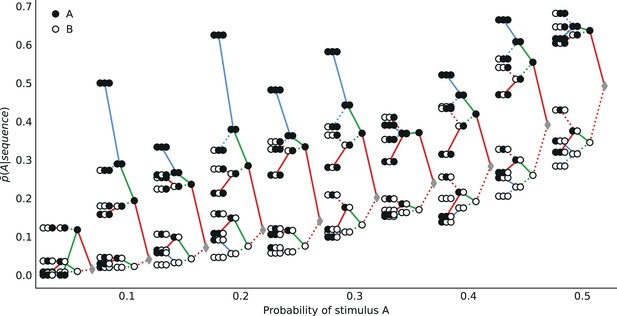

Turning to the behavior of subjects, we denote by the proportion of trials in which a subject predicts the event A. In the equiprobable condition (), the subjects predict either side on about half the trials (, subjects pooled; standard error of the mean (sem): 0.008; p-value of t-test of equality with 0.5: 0.59). In the non-equiprobable conditions, the optimal behavior is to predict A on none of the trials () if , or on all trials () if . The proportion of predictions A adopted by the subjects also increases as a function of the stimulus generative probability (Pearson correlation coefficient between and , subjects pooled: .97; p-value: 3.3e-6; correlation between the ‘logits’, : 0.994, p-value: 5.7e-9.), but not as steeply: it lies between the stimulus generative probability , and the optimal response 0 (if ) or 1 (if ; Figure 2a).

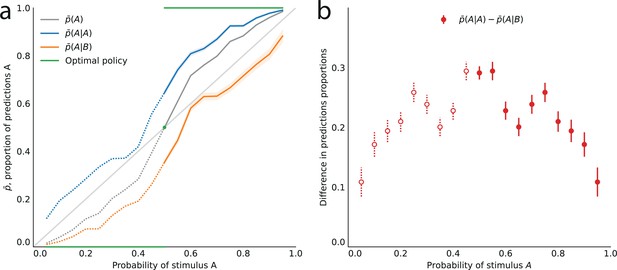

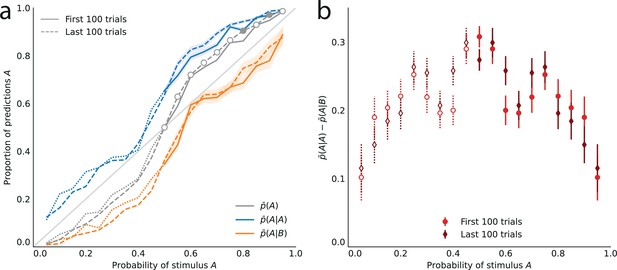

Across all stimulus generative probabilities, subjects are more likely than average to make a prediction equal to the preceding observation.

(a) Proportion of predictions A in subjects’ pooled responses as a function of the stimulus generative probability, conditional on observing an A (blue line) or a B (orange line) on the preceding trial, and unconditional (grey line). The widths of the shaded areas indicate the standard error of the mean (n = 178 to 3603). (b) Difference between the proportion of predictions A conditional on the preceding observation being an A, and the proportion of predictions A conditional on the preceding observation being a B. This difference is positive across all stimulus generative probabilities, that is, observing an A at the preceding trial increases the probability of predicting an A (p-values of Fisher’s exact tests, with Bonferroni-Holm-Šidák correction, are all below 1e-13). Bars are twice the square root of the sum of the two squared standard errors of the means (for each point, total n: 3582 to 3781). The binary nature of the task results in symmetries in this representation of data: in panel (a) the grey line is symmetric about the middle point and the blue and orange lines are reflections of each other, and in panel (b) the data is symmetric about the middle probability, 0.5. For this reason, for values of the stimulus generative probability below 0.5 we show the curves in panel (a) as dotted lines, and the data points in panel (b) as white dots with dotted error bars.

First-order sequential effects: attractive influence of the most recent stimulus on subjects’ predictions

The sequences presented to subjects correspond to independent, Bernoulli-distributed random events. Having shown that the subjects’ predictions follow (in a non-optimal fashion) the stimulus generative probability, we now test whether they also exhibit the non-independence of consecutive trials featured by the Bernoulli process. Under this hypothesis and in the stationary regime, the proportion of predictions A conditional on the preceding stimulus being A, , should be no different than the proportion of predictions A conditional on the preceding stimulus being B, . (Here and below, denotes the proportion of predictions X conditional on the preceding observation being Y, and not on the preceding response being Y. For the possibility that subjects’ responses depend on the preceding response, see Methods.)

In other words, conditioning on the preceding stimulus should have no effect. In subjects’ responses, however, these two conditional proportions are markedly different for all stimulus generative probabilities (Fisher exact test, subjects pooled: all p-values < 1e-10; Figure 2a). Both quantities increase as a function of the stimulus generative probability, but the proportions of predictions A conditional on an A are consistently greater than the proportions of predictions A conditional on a B, i.e., (Figure 2b). (We note that because the stimulus is either A or B, it follows that, symmetrically, the proportions of predictions B conditional on a B are consistently greater than the proportions of predictions B conditional on an A.) In other words, the preceding stimulus has an ‘attractive’ sequential effect. In addition, this attractive sequential effect seems stronger for values of the stimulus generative probability closer to the equiprobable case (p = 0.5), and to decrease for more extreme values ( closer to 0 or to 1; Figure 2b). The results in Figure 2 are obtained by pooling together the responses of the subjects. Results derived from an across-subjects analysis are very similar; see Appendix.

A framework of costly inference

The attractive effect of the preceding stimulus on subjects’ responses suggests that the subjects have not correctly inferred the Bernoulli statistics of the process generating the stimuli. We investigate the hypothesis that their ability to infer the underlying statistics of the stimuli is hampered by cognitive constraints. We assume that these constraints can be understood as a cost, bearing on the representation, by the brain, of the subject’s beliefs about the statistics. Specifically, we derive an array of models from a framework of inference under costly posteriors (Prat-Carrabin et al., 2021a), which we now present. We consider a model subject who is presented on each trial with a stimulus (where 0 and 1 encode for B and A, respectively) and who uses the sequence of stimuli to infer the stimulus statistics, over which she holds the belief distribution . A Bayesian observer equipped with this belief and observing a new observation would obtain its updated belief through Bayes’ rule. However, a cognitive cost hinders our model subject’s ability to represent probability distributions . Thus, she approximates the posterior through another distribution that minimizes a loss function defined as

where is a measure of distance between two probability distributions, and is a coefficient specifying the relative weight of the cost. (We are not proposing that subjects actively minimize this quantity, but rather that the brain’s inference process is an effective solution to this optimization problem.) Below, we use the Kullback-Leibler divergence for the distance (i.e. ). If , the solution to this minimization problem is the Bayesian posterior; if , the cost distorts the Bayesian solution in ways that depend on the form of the cost borne by the subject (we detail further below the two kinds of costs we investigate).

In our framework, the subject assumes that the preceding stimuli ( with ) and a vector of parameters jointly determine the distribution of the stimulus at trial , . Although in our task the stimuli are Bernoulli-distributed (thus they do not depend on preceding stimuli) and a single parameter determines the probability of the outcomes (the stimulus generative probability), the subject may admit the possibility that more complex mechanisms govern the statistics of the stimuli, for example transition probabilities between consecutive stimuli. Therefore, the vector may contain more than one parameter and the number of preceding stimuli assumed to influence the probability of the following stimulus, which we call the ‘Markov order’, may be greater than 0.

Below, we call ‘Bernoulli observer’ any model subject who assumes that the stimuli are Bernoulli-distributed (); in this case the vector consists of a single parameter that determines the probability of observing A, which we also denote by for the sake of concision. The bias and variability in the inference of the Bernoulli observer is studied in Prat-Carrabin et al., 2021a. We call ‘Markov observer’ any model subject who posits that the probability of the stimulus depends on the preceding stimuli (). In this case, the vector contains the conditional probabilities of observing A after observing each possible sequence of stimuli. For instance, with the vector is the pair of parameters denoting the probabilities of observing a stimulus A after observing, respectively, a stimulus A and a stimulus B. In the absence of a cost, the belief over the parameter(s) eventually converges towards the parameter vector that is consistent with the generative Bernoulli statistics governing the stimulus (except if the prior precludes this parameter vector). Below, we assume a uniform prior.

To understand how the costs contort the inference process, it is useful to have in mind the solution to the ‘unconstrained’ inference problem (with ), i.e., the Bayesian posterior, which we denote by . In the case of a Bernoulli observer (), after trials, the Bayesian posterior is a Beta distribution,

where is the number of stimuli observed up to trial , that is, , and . As more evidence is accumulated, the Bayesian posterior gradually narrows and converges towards the value of the stimulus generative probability (Figure 3c and d, grey lines).

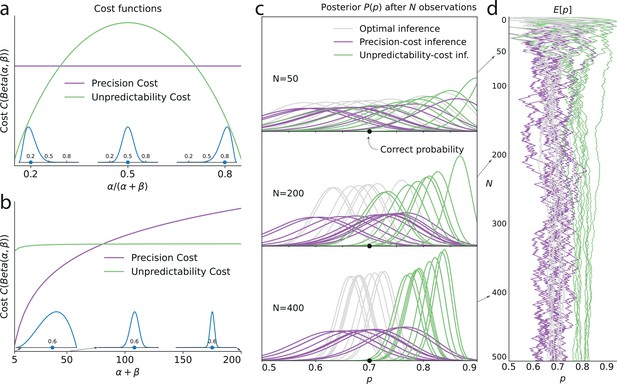

Illustration of the Bernoulli-observer models, with unpredictability and precision costs.

(a) Precision cost (purple) and unpredictability cost (green lines) of a Beta distribution with parameters and , as functions of the mean of the distribution, , and keeping the entropy constant. The precision cost is the negative of the entropy and it is thus constant, regardless of the mean of the distribution. The unpredictability cost is larger when the mean of the distribution is closer to 0.5 (i.e. when unpredictable environments are likely, under the distribution). Insets: Beta distributions with mean 0.2, 0.5, and 0.8, and constant entropy. (b) Costs as functions of the sample size parameter, . A larger sample size implies a higher precision and lower entropy, thus the precision cost increases as a function of the sample size, whereas the unpredictability cost is less sensitive to changes in this parameter. Insets: Beta distributions with mean 0.6 and sample size parameter, , equal to 5, 50, and 200. (c) Posteriors of an optimal observer (gray), a precision-cost observer (purple) and an unpredictability-cost observer (green lines), after the presentation of ten sequences of N = 50, 200, and 400 observations sampled from a Bernoulli distribution of parameter 0.7. The posteriors of the optimal observer narrow as evidence is accumulated, and the different posteriors obtained after different sequences of observations are drawn closer to each other and to the correct probability. The posteriors of the unpredictability-cost observer also narrow and group together, but around a probability larger (less unpredictable) than the correct probability. Precise distributions are costly to the precision-cost observer and thus the posteriors do not narrow after long sequences of observations. Instead, the posteriors fluctuate with the recent history of the stimuli. (d) Expected probability resulting from the inference. The optimal observer (gray) converges towards the correct probability; the unpredictability-cost observer (green) converges towards a biased (larger) probability; and the precision-cost observer (purple lines) does not converge, but instead fluctuates with the history of the stimuli.

The ways in which the Bayesian posterior is distorted, in our models, depend on the nature of the cost that weighs on the inference process. Although many assumptions could be made on the kind of constraint that hinders human inference, and on the cost it would entail in our framework, here we examine two costs that stem from two possible principles: that the cost is a function of the beliefs held by the subject, or that it is a function of the environment that the subject is inferring. We detail, below, these two costs.

Precision cost

A first hypothesis about the inference process of subjects is that the brain mobilizes resources to represent probability distributions, and that more ‘precise’ distributions require more resources. We write the cost associated with a distribution, , as the negative of its entropy,

which is a measure of the amount of certainty in the distribution. Wider (less concentrated) distributions provide less information about the probability parameter and are thus less costly than narrower (more concentrated) distributions (Figure 3b). As an extreme case, the uniform distribution is the least costly.

With this cost, the loss function (Equation 1) is minimized by the distribution equal to the product of the prior and the likelihood, raised to the exponent , and normalized, i.e.,

Since is strictly positive, the exponent is positive and lower than 1. As a result, the solution ‘flattens’ the Bayesian posterior, and in the extreme case of an unbounded cost () the posterior is the uniform distribution.

Furthermore, in the expression of our model subject’s posterior, the likelihood is raised after trials to the exponent , it thus decays to zero as the number of new stimuli increases. One can interpret this effect as gradually forgetting past observations. Specifically, we recover the predictions of leaky-integration models, in which remote patterns in the sequence of stimuli are discounted through an exponential filter (Yu and Cohen, 2008; Meyniel et al., 2016); here, we do not posit the gradual forgetting of remote observations, but instead we derive it as an optimal solution to a problem of constrained inference. We illustrate leaky integration in the case of a Bernoulli observer (): in this case, the posterior after trials, , is a Beta distribution,

where and are exponentially-filtered counts of the number of stimuli A and B observed up to trial , i.e.,

In other words, the solution to the constrained inference problem, with the precision cost, is similar to the Bayesian posterior (Equation 2), but with counts of the two stimuli that gradually ‘forget’ remote observations (in the absence of a cost, that is, , we have and , and thus we recover the Bayesian posterior). As a result, these counts fluctuate with the recent history of the stimuli. Consequently, the posterior is dominated by the recent stimuli: it does not converge, but instead fluctuates with the recent stimulus history (Figure 3c and d, purple lines; compare with the green and gray lines). Hence, this model implies predictions about subsequent stimuli that depend on the stimulus history, i.e., it predicts sequential effects.

Unpredictability cost

A different hypothesis is that the subjects favor, in their inference, parameter vectors that correspond to more predictable outcomes. We quantify the outcome unpredictability by the Shannon entropy (Shannon, 1948) of the outcome implied by the vector of parameters , which we denote by . (In the Bernoulli-observer case, ; for the Markov-observer cases, see Methods.) The cost associated with the distribution is the expectation of this quantity averaged over beliefs, i.e.,

which we call the ‘unpredictability cost’. For a Bernoulli observer, a posterior concentrated on extreme values of the Bernoulli parameter (toward 0 or 1), thus representing more predictable environments, comes with a lower cost than a posterior concentrated on values of the Bernoulli parameter close to 0.5, which correspond to the most unpredictable environments (Figure 3a).

After trials, the loss function (Equation 1) under this cost is minimized by the posterior

i.e., the product of the Bayesian posterior, which narrows with around the stimulus generative probability, and of a function that is larger for values of that imply less entropic (i.e. more predictable) environments (see Methods). In short, with the unpredictability cost the model subject’s posterior is ‘pushed’ towards less entropic values of .

In the Bernoulli case (), the posterior after stimuli has a global maximum, , that depends on the proportion of stimuli A observed up to trial . As the number of presented stimuli grows, the posterior becomes concentrated around this maximum. The proportion naturally converges to the stimulus generative probability, , thus our subject’s inference converges towards the value which is different from the true value , in the non-equiprobable case (). The equiprobable case () is singular, in that with a weak cost () the inferred probability is unbiased (), while with a strong cost () the inferred probability does not converge but instead alternates between two values above and below 0.5; see Prat-Carrabin et al., 2021a. In other words, except in the equiprobable case, the inference converges but it is biased, i.e., the posterior peaks at an incorrect value of the stimulus generative probability (Figure 3c and d, green lines). This value is closer to the extremes (0 and 1) than the stimulus generative probability, that is, it implies an environment more predictable than the actual one (Figure 3d).

In the case of a Markov observer (), the posterior also converges to a vector of parameters which implies not only a bias but also that the conditional probabilities of a stimulus A (conditioned on different stimulus histories) are not equal. The prediction of the next stimulus being A on a given trial depends on whether the preceding stimulus was A or B: this model therefore predicts sequential effects. We further examine below the behavior of this model in the cases of a Bernoulli observer and of different Markov observers. We refer the reader interested in more details on the Markov models, including their mathematical derivations, to the Methods section.

In short, with the unpredictability-cost models, when , the inference process converges to an asymptotic posterior which does not itself depend on the history of the stimulus, but that is biased (Figure 3c, d, green lines). In particular, for Markov observers (), the asymptotic posterior corresponds to an erroneous belief about the dependency of the stimulus on the recent stimulus history, which results in sequential effects in behavior.

Overview of the inference models

Although the two families of models derived from the two costs both potentially generate sequential effects, they do so by giving rise to qualitatively different inference processes. Under the unpredictability cost, the inference converges to a posterior that, in the Bernoulli case (), implies a biased estimate of the stimulus generative probability (Figure 3d, green lines), while in the Markov case () it implies the belief that there are serial dependencies in the stimuli: predictions therefore depend on the recent stimulus history. By contrast, the precision cost prevents beliefs from converging (Figure 3c, purple lines). As a result, the subject’s predictions vary with the recent stimulus history (Figure 3d). This inference process amounts to an exponential discount of remote observations, or equivalently, to the overweighting of recent observations (Equation 6).

To investigate in more detail the sequential effects that these two costs produce, we implement two families of inference models derived from the two costs. Each model is characterized by the type of cost (unpredictability cost or precision cost), and by the assumed Markov order (): we examine the case of a Bernoulli observer () and three cases of Markov observers (with 1, 2, and 3). We thus obtain models of inference. Each of these models has one parameter controlling the weight of the cost. (We also examine a ‘hybrid’ model that combines the two costs; see below.)

Response-selection strategy

We assume that the subject’s response on a given trial depends on the inferred posterior according to a generalization of ‘probability matching’ implemented in other studies (Battaglia et al., 2011; Yu and Huang, 2014; Prat-Carrabin et al., 2021b). In this response-selection strategy, the subject predicts A with the probability , where is the expected probability of a stimulus A derived from the posterior, i.e., . The single parameter controls the randomness of the response: with the subject predicts A and B with equal probability; with the response-selection strategy corresponds to probability matching, that is, the subject predicts A with probability ; and as increases toward infinity the choices become optimal, that is, the subjects predicts A if the expected probability of observing a stimulus A, , is greater than 0.5, and predicts B if it is lower than 0.5 (if the subject chooses A or B with equal probability). In our investigations, we also implement several other response-selection strategies, including one in which subjects have a propensity to repeat their preceding response, or conversely, to alternate; these analyses do not change our conclusions (see Methods).

Model fitting favors Markov-observer models

Each of our eight models has two parameters: the factor weighting the cost, , and the exponent of the generalized probability-matching, . We fit the parameters of each model to the responses of each subject, by maximizing their likelihoods. We find that 60% of subjects are best fitted by one of the unpredictability-cost models, while 40% are best fitted by one of the precision-cost models. When pooling the two types of cost, 65% of subjects are best fitted by a Markov-observer model. We implement a ‘Bayesian model selection’ procedure (Stephan et al., 2009), which takes into account, for each subject, the likelihoods of all the models (and not only the maximum among them) in order to obtain a Bayesian posterior over the distribution of models in the general population (see Methods). The derived expected probability of unpredictability-cost models is 57% (and 43% for precision-cost models) with an exceedance probability (i.e. probability that unpredictability-cost models are more frequent in the general population) of 78%. The expected probability of Markov-observer models, regardless of the cost used in the model, is 70% (and 30% for Bernoulli-observer models) with an exceedance probability (i.e. probability that Markov-observer models are more frequent in the general population) of 98%. These results indicate that the responses of subjects are generally consistent with a Markov-observer model, although the stimuli used in the experiment are Bernoulli-distributed. As for the unpredictability-cost and the precision-cost families of models, Bayesian model selection does not provide decisive evidence in favor of either model, indicating that they both capture some aspects of the responses of the subjects. Below, we examine more closely the behaviors of the models, and point to qualitative differences between the predictions resulting from each model family.

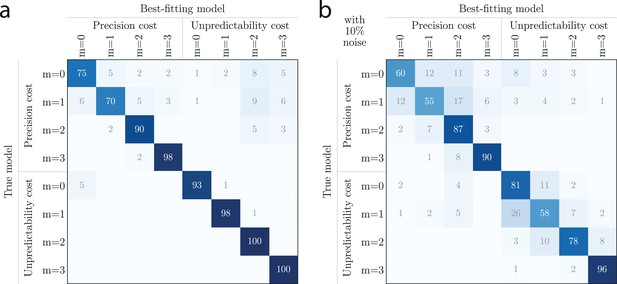

Before turning to these results, we validate the robustness of our model-fitting procedure with several additional analyses. First, we estimate a confusion matrix to examine the possibility that the model-fitting procedure could misidentify the models which generated test sets of responses. We find that the best-fitting model corresponds to the true model in at least 70% of simulations (the chance level is 12.5%=1/8 models), and actually more than 90% for the majority of models (see Appendix).

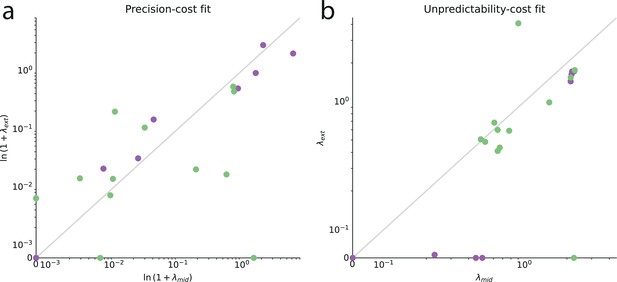

Second, we seek to verify whether the best-fitting cost factor, , that we obtain for each subject is consistent across the range of probabilities tested. Specifically, we fit separately the models to the responses obtained in the blocks of trials whose stimulus generative probability was ‘medium’ (between 0.3 and 0.7, included) on the one hand, and to the responses obtained when the probability was ‘extreme’ (below 0.3, and above 0.7) on the other hand; and we compare the values of the best-fitting cost factors in these two cases. More precisely, for the precision-cost family, we look at the inverse of the decay time, , which is the inverse of the characteristic time over which the model subject ‘forgets’ past observations. With both families of models, we find that on a logarithmic scale the parameters in the medium- and extreme-probabilities cases are significantly correlated across subjects (Pearson’s , precision-cost models: 0.75, p-value: 1e-4; unpredictability-cost models: , p-value: .036). In other words, if a subject is best fitted by a large cost factor in medium-probabilities trials, he or she is likely to be also best fitted by a large cost factor in extreme-probabilities trials. This indicates that our models capture idiosyncratic features of subjects that generalize across conditions instead of varying with the stimulus probability (see Appendix).

Third, as mentioned above we examine a variant of the response-selection strategy in which the subject sometimes repeats the preceding response, or conversely alternates and chooses the other response, instead of responding based on the inferred probability of the next stimulus. This propensity to repeat or alternate does not change the best-fitting inference model of most subjects, and the best-fitting values of the parameters and are very stable when allowing or not for this propensity. This analysis supports the results we present here, and speaks to the robustness of the model-fitting procedure (see Methods).

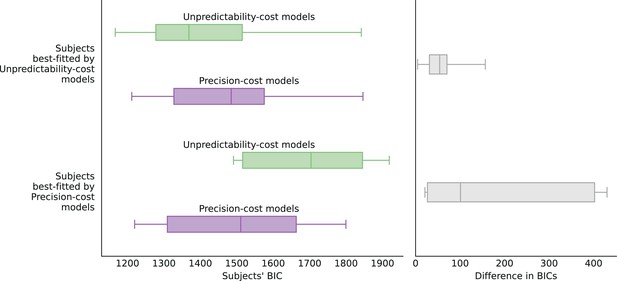

Finally, as the unpredictability-cost family and the precision-cost family of models both seem to capture the responses of a sizable share of the subjects, one might assume that the behavior of most subjects actually fall ‘somewhere in between’, and would be best accounted for by a hybrid model combining the two costs. In our investigations, we have implemented such a model, whereby the subject’s approximate posterior results from the minimization of a loss function that includes both a precision cost, with weight , and an unpredictability cost, with weight (and the response-selection strategy is the generalized probability matching, with parameter ). We do not find that most subjects’ responses are better fitted (as measured by the Bayesian Information Criterion Schwarz, 1978) by a combination of the two costs: instead, for more than two thirds of subjects, the best-fitting model features just one cost (see Methods). In other words, the two cost seems to capture different aspects of the behavior that are predominant in different subpopulations. Below, we examine the behavioral patterns resulting from each cost type, in comparison with the behavior of the subjects.

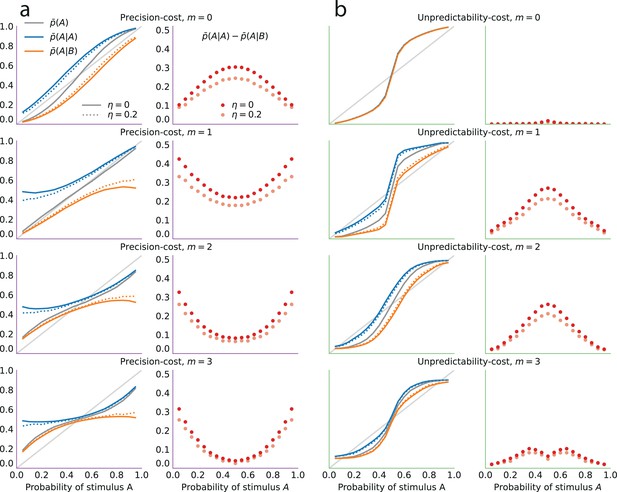

Models of costly inference reproduce the attractive effect of the most recent stimulus

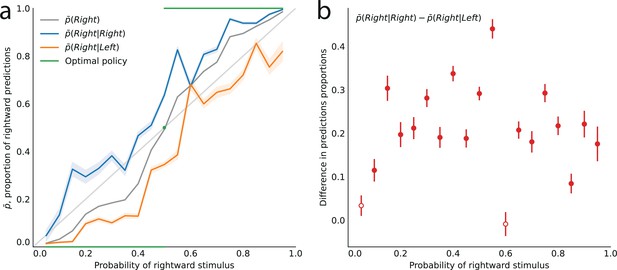

We now examine the behavioral patterns resulting from the models. All the models we consider predict that the proportion of predictions A, , is a smooth, increasing function of the stimulus generative probability (when and ; Figure 4a–d, grey lines), thus we focus, here, on the ability of the models to reproduce the subjects’ sequential effects. With the unpredictability-cost model of a Bernoulli observer (), the belief of the model subject, as mentioned above, asymptotically converges in non-equiprobable cases to an erroneous value of the stimulus generative probability (Figure 3d, green lines). After a large number of observations (such as the 200 ‘passive’ trials, in our task), the sensitivity of the belief to new observations becomes almost imperceptible; as a result, this model predicts practically no sequential effects (Figure 4b), that is, . With the unpredictability-cost model of a Markov observer (e.g. ), the belief of the model subject also converges, but to a vector of parameters that implies a sequential dependency in the stimulus, that is, , resulting in sequential effects in predictions, that is, . The parameter vector yields a more predictable (less entropic) environment if the probability conditional on the more frequent outcome (say, A) is less entropic than the probability conditional on the less frequent outcome (B). This is the case if the former is greater than the latter, resulting in the inequality , that is, in sequential effects of the attractive kind (Figure 4d). (The case in which B is the more frequent outcome results in the inequality , i.e., , i.e., the same, attractive sequential effects.)

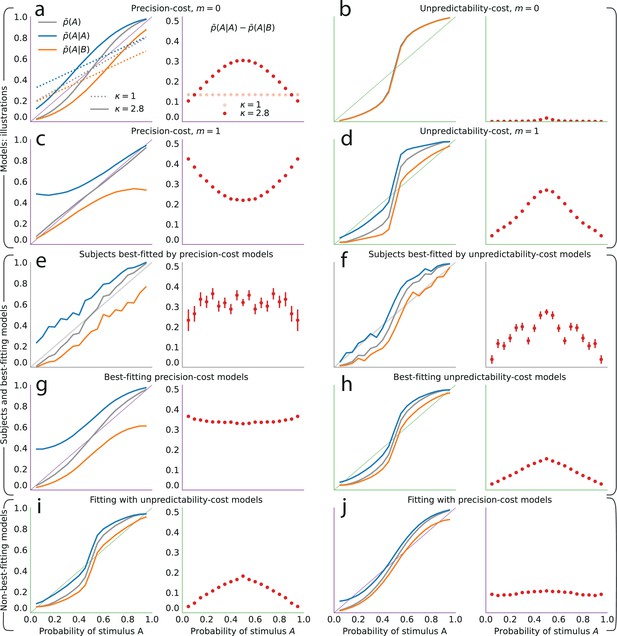

The precision-cost and unpredictability-cost models reproduce the subjects’ attractive sequential effects.

(a–h) Left subpanels: proportion of predictions A as a function of the stimulus generative probability, conditional on observing A (blue line) or B (orange line) on the preceding trial, and unconditional (grey line). Right subpanels: difference between the proportion of predictions A conditional on observing A, and conditional on observing B, . In all panels this difference is positive, indicating an attractive sequential effect (i.e. observing A at the preceding trial increases the probability of predicting A). (a–d) Models with the precision cost (a,c) or the unpredictability cost (b,d), and with a Bernoulli observer (; a,b) or a Markov observer with (c,d). (a) Behavior with the generalized probability-matching response-selection strategy with (solid lines, red dots) and with (dotted lines, light-red dots). (e,f) Pooled responses of the subjects best-fitted by a precision-cost model (e) or by an unpredictability-cost model (f). Right subpanels: bars are twice the square root of the sum of the two squared standard errors of the means (for each point, total n: e: 1393, f: 2189 to 2388). (g,h) Pooled responses of the models that best fit the corresponding subjects in panels (e,f). (i,j) Pooled responses of the unpredictability-cost models (i) and of the precision-cost models (j), fitted to the subjects best-fitted, respectively, by precision-cost models, and by unpredictability-cost models.

Turning to the precision-cost models, we have noted that in these models the posterior fluctuates with the recent history of the stimuli (Figure 3c): as a result, sequential effects are obtained, even with a Bernoulli observer (; Figure 4a). The most recent stimulus has the largest weight in the exponentially filtered counts that determine the posterior (Equation 6), thus the model subject’s prediction is biased towards the last stimulus, that is, the sequential effect is attractive (). With the traditional probability-matching response-selection strategy (i.e. ), the strength of the attractive effect is the same across all stimulus generative probabilities (i.e. the difference is constant; Figure 4a, dotted lines and light-red dots). With the generalized probability-matching response-selection strategy, if , proportions below and above 0.5 are brought closer to the extremes (0 and 1, respectively), resulting in larger sequential effects for values of the stimulus generative probability closer to 0.5 (Figure 4a, solid lines and red dots; the model is simulated with , a value representative of the subjects’ best-fitting values for this parameter). We also find stronger sequential effects closer to the equiprobable case in subjects’ data (Figure 2b).

The precision-cost model of a Markov observer () also predicts attractive sequential effects (Figure 4c). While the behavior of the Bernoulli observer (with a precision cost) is determined by two exponentially-filtered counts of the two possible stimuli (Equation 6), that of the Markov observer with depends on four exponentially filtered counts of the four possible pairs of stimuli. After observing a stimulus B, the belief that the following stimulus should be A or B is determined by the exponentially filtered counts of the pairs BA and BB. If is large, i.e., if the stimulus B is infrequent, then the BA and BB pairs are also infrequent and the corresponding counts are close to zero: the model subject thus behaves as if only very little evidence had been observed about the transitions B to A and B to B in this case, resulting in a proportion of predictions A conditional on a preceding B, , close to 0.5 (Figure 4c, orange line). Consequently, the sequential effects are stronger for values of the stimulus generative probabilities closer to the extreme (Figure 4c, red dots).

Both families of costs are thus able to produce attractive sequential effects, albeit with some qualitative differences. (In Figure 4a–d we show the behaviors resulting from the two costs for a Bernoulli observer and a Markov observer of order ; the Markov observers of higher order exhibit qualitatively similar behaviors; see Methods.) As the model fitting indicates that different groups of subjects are best fitted by models belonging to the two families, we examine separately the behaviors of the subjects whose responses are best fitted by each of the two costs (Figure 4e and f), in comparison with the behaviors of the corresponding best-fitting models (Figure 4g and h). This provides a finer understanding of the behavior of subjects than the group average shown in Figure 2. For the subjects best fitted by precision-cost models, the proportion of predictions A, , when the stimulus generative probability is close to 0.5, is a less steep function of this probability than for the subjects best-fitted by unpredictability-cost models (Figure 4e and f, grey lines); furthermore, their sequential effects are larger (as measured by the difference ), and do not depend much on the stimulus generative probability (Figure 4e and f, red dots). The corresponding models reproduce the behavioral patterns of the subjects that they best fit (Figure 4g and h). Each family of models seems to capture specific behaviors exhibited by the subjects: when fitting the unpredictability-cost models to the responses of the subjects that are best fitted by precision-cost models, and conversely when fitting the precision-cost models to the responses of the subjects that are best fitted by unpredictability-cost models, the models do not reproduce well the subjects’ behavioral patterns (Figure 4i and j). The precision-cost models, however, seem slightly better than the unpredictability-cost models at capturing the behavior of the subjects that they do not best fit (Figure 4, compare panel j to panel f, and panel i to panel e). Substantiating this observation, the examination of the distributions of the models’ BICs across subjects shows that when fitting the models onto the subjects that they do not best fit, the precision-cost models fare better than the unpredictability-cost models (see Appendix).

Beyond the most recent stimulus: patterns of higher-order sequential effects

Notwithstanding the quantitative differences just presented, both families of models yield qualitatively similar attractive sequential effects: the model subjects’ predictions are biased towards the preceding stimulus. Does this pattern also apply to the longer history of the stimulus, i.e., do more distant trials also influence the model subjects’ predictions? To investigate this hypothesis, we examine the difference between the proportion of predictions A after observing a sequence of length that starts with A, minus the proportion of predictions A after the same sequence, but starting with B, i.e., , where is a sequence of length , and and denote the same sequence preceded by A and by B. This quantity enables us to isolate the influence of the -to-last stimulus on the current prediction. If the difference is positive, the effect is ‘attractive’; if it is negative, the effect is ‘repulsive’ (in this latter case, the presentation of an A decreases the probability that the subjects predicts A in a later trial, as compared to the presentation of a B); and if the difference is zero there is no sequential effect stemming from the -to-last stimulus. The case corresponds to the immediately preceding stimulus, whose effect we have shown to be attractive, i.e., , in the responses both of the best-fitting models and of the subjects (Figures 2b, 4g and h).

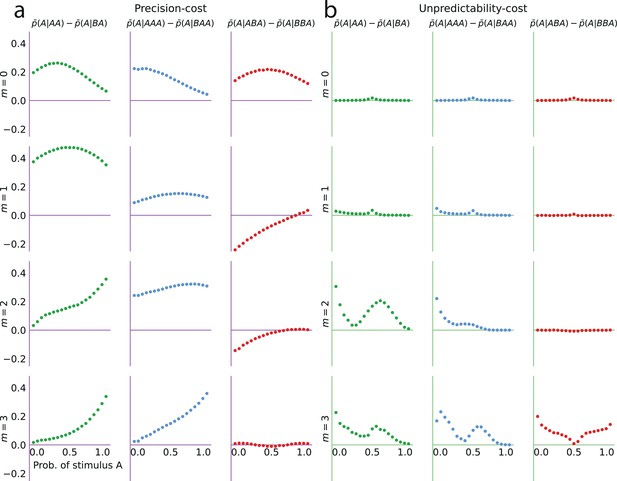

We investigate the effect of the -to-last stimulus on the behavior of the two families of models, with , , and . We present here the main results of this investigation; we refer the reader to Methods for a more detailed analysis. With unpredictability-cost models of Markov order , there are non-vanishing sequential effects stemming from the -to-last stimulus only if the Markov order is greater than or equal to the distance from this stimulus to the current trial, i.e., if . In this case, the sequential effects are attractive (Figure 5).

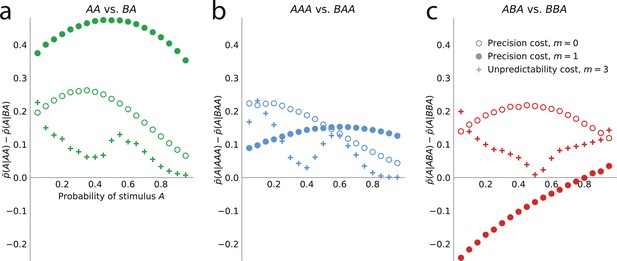

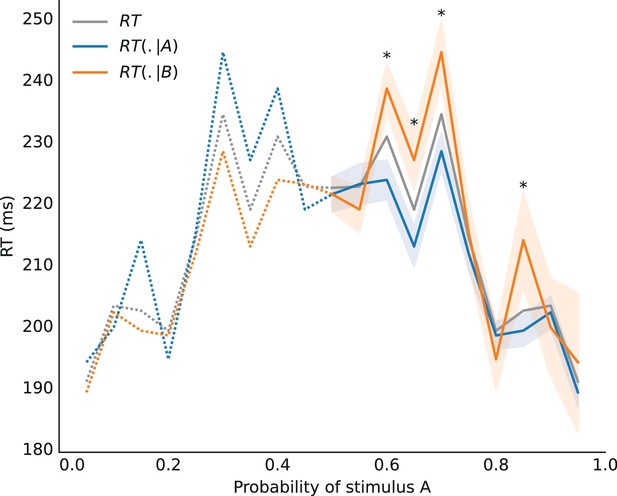

Higher-order sequential effects: the precision-cost model of a Markov observer predicts a repulsive effect of the third-to-last stimulus.

Sequential effect of the second-to-last (a) and third-to-last (b,c) stimuli, in the responses of the precision-cost model of a Bernoulli observer (; white circles), of the precision-cost model of a Markov observer with (filled circles), and of the unpredictability-cost model of a Markov observer with (crosses). (a) Difference between the proportion of prediction A conditional on observing AA, and conditional on observing BA, i.e., , as a function of the stimulus generative probability. With the three models, this difference is positive, indicating an attractive sequential effect of the second-to-last stimulus. (b) Difference between the proportion of prediction A conditional on observing AAA, and conditional on observing BAA, i.e., . The positive difference indicates an attractive sequential effect of the third-to-last stimulus in this case. (c) Difference between the proportion of prediction A conditional on observing ABA, and conditional on observing BBA, i.e., . With the precision-cost model of a Markov observer, the negative difference when the stimulus generative probability is lower than 0.8 indicates a repulsive sequential effect of the third-to-last stimulus in this case, while when the probability is greater than 0.8, and with the predictability-cost model of a Bernoulli observer and with the unpredictability-cost model of a Markov observer, the positive difference indicates an attractive sequential effect of the third-to-last stimulus.

With precision-cost models, the -to-last stimuli yield non-vanishing sequential effects regardless of the Markov order, . With , the effect is attractive, i.e., . With (second-to-last stimulus), the effect is also attractive, i.e., in the case of the pair of sequences AA and BA, (Figure 5a). By symmetry, the difference is also positive for the other pair of relevant sequences, AB and BB (e.g. we note that , and that when the probability of A is is equal to when the probability of A is . We detail in Methods such relations between the proportions of predictions A or B in different situations. These relations result in the symmetries of Figure 2, for the sequential effect of the last stimulus, while for higher-order sequential effects they imply that we do not need to show, in Figure 5, the effects following all possible past sequences of two or three stimuli, as the ones we do not show are readily derived from the ones we do.)

As for the third-to-last stimulus (), it can be followed by four different sequences of length two, but we only need to examine two of these four, for the reasons just presented. We find that for the precision-cost models, with all the Markov orders we examine (from 0 to 3), the probability of predicting A after observing the sequence AAA is greater than that after observing the sequence BAA, i.e., , that is, there is an attractive sequential effect of the third-to-last stimulus if the sequence following it is AA (and, by symmetry, if it is BB; Figure 5b). So far, thus, we have found only attractive effects. However, the results are less straightforward when the third-to-last stimulus is followed by the sequence BA. In this case, for a Bernoulli observer (), the effect is also attractive: (Figure 5c, white circles). With Markov observers (), over a range of stimulus generative probability , the effect is repulsive: , that is, the presentation of an A decreases the probability that the model subject predicts A three trials later, as compared to the presentation of a B (Figure 5c, filled circles). The occurrence of the repulsive effect in this particular case is a distinctive trait of the precision-cost models of Markov observers (); we do not obtain any repulsive effect with any of the unpredictability-cost models, nor with the precision-cost model of a Bernoulli observer ().

Subjects’ predictions exhibit higher-order repulsive effects

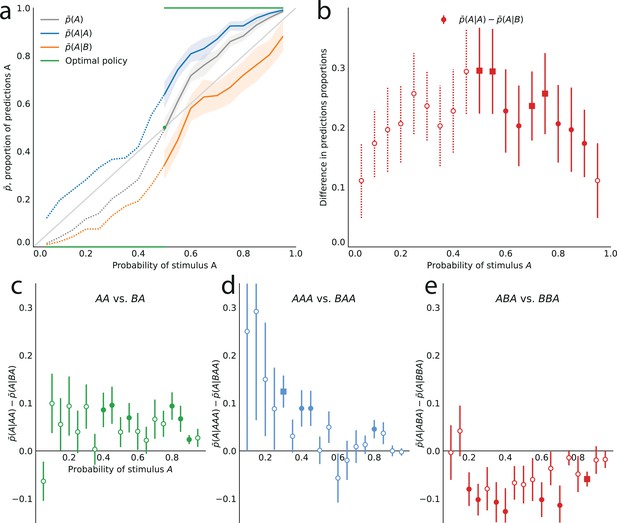

We now examine the sequential effects in subjects’ responses, beyond the attractive effect of the preceding stimulus (; discussed above). With (second-to-last stimulus), for the majority of the 19 stimulus generative probabilities , we find attractive sequential effects: the difference is significantly positive (Figure 6a; p-values <0.01 for 11 stimulus generative probabilities, <0.05 for 13 probabilities; subjects pooled). With (third-to-last stimulus), we also find significant attractive sequential effects in subjects’ responses for some of the stimulus generative probabilities, when the third-to-last stimulus is followed by the sequence AA (Figure 6b; p-values <0.01 for four probabilities, <0.05 for seven probabilities). When it is instead followed by the sequence BA, we find that for eight stimulus generative probabilities, all between 0.25 and 0.75, there is a significant repulsive sequential effect: (p-values <0.01 for six probabilities, <0.05 for eight probabilities; subjects pooled). Thus, in these cases, the occurrence of A as the third-to-last stimulus increases (in comparison with the occurrence of a B) the proportion of the opposite prediction, B. For the remaining stimulus generative probabilities, this difference is in most cases also negative although not significantly different from zero (Figure 6c). (An across-subjects analysis yields similar results; see Supplementary Materials.) Figure 6d summarizes subjects’ sequential effects, and exhibits the attractive and repulsive sequential effects in their responses (compare solid and dotted lines). (In this tree-like representation, we show averages across the stimulus generative probabilities; a figure with the individual ‘trees’ for each probability is provided in the Appendix.)

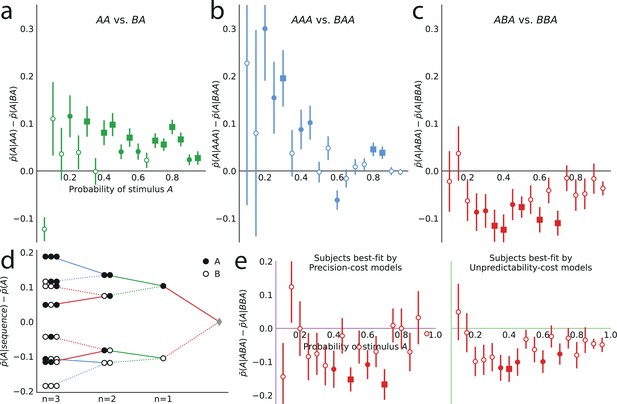

Patterns of attractive and repulsive sequential effects in subjects’ responses.

(a) Difference between the proportion of prediction A conditional on observing the sequence AA, and conditional on observing BA, i.e., , as a function of the stimulus generative probability. This difference is in most cases positive, indicating an attractive sequential effect of the second-to-last stimulus. (b) Difference between the proportion of prediction A conditional on observing AAA, and conditional on observing BAA, i.e., . This difference is positive in most cases, indicating an attractive sequential effect of the third-to-last stimulus. (c) Difference between the proportion of prediction A conditional on observing ABA, and conditional on observing BBA, i.e., . This difference is negative in most cases, indicating a repulsive sequential effect of the third-to-last stimulus. (d) Differences, averaged over all tested stimulus generative probabilities, between the proportion of predictions A conditional on sequences of up to three past observations, minus the unconditional proportion. The proportion conditional on a sequence is an average of the two proportions conditional on the same sequence preceded by another, ‘older’ observation, A or B, resulting in a binary-tree structure in this representation. If this additional past observation is A (respectively, B), we connect the two sequences with a solid line (respectively, a dotted line). In most cases, conditioning on an additional A increases the proportion of predictions A (in comparison to conditioning on an additional B), indicating an attractive sequential effect, except when the additional observation precedes the sequence BA (or its symmetric AB), in which cases repulsive sequential effects are observed (dotted line ‘above’ solid line). (e) Same as (c), with subjects split in two groups: the subjects best-fitted by precision-cost models (left) and the subjects best-fitted by unpredictability-cost models (right). In panels a-c and e, the filled circles indicate that the p-value of the Fisher exact test is below 0.05, and the filled squares indicate that the p-value with Bonferroni-Holm-Šidák correction is below 0.05. Bars are twice the square root of the sum of the two squared standard errors of the means (for each point, total n: a: 178 to 3584, b: 37 to 3394, c: 171 to 1868, e: 63 to 1184). In all panels, the responses of all the subjects are pooled together.

The repulsive sequential effect of the third-to-last stimulus in subjects’ predictions only occurs when the third-to-last stimulus is A followed by the sequence BA. It is also only in this case that the repulsive effect appears with the precision-cost models of a Markov observer (while it never appears with the unpredictability-cost models). This qualitative difference suggests that the precision-cost models offer a better account of sequential effects in subjects. However, model-fitting onto the overall behavior presented above showed that a fraction of the subjects is better fitted by the unpredictability-cost models. We investigate, thus, the presence of a repulsive effect in the predictions of the subjects best fitted by the precision-cost models, and of those best fitted by the unpredictability-cost models. For the subjects best fitted by the precision-cost models, we find (expectedly) that there is a significant repulsive sequential effect of the third-to-last stimulus (; p-values <0.01 for two probabilities, <0.05 for four probabilities; subjects pooled; Figure 6e, left panel). For the subjects best fitted by the unpredictability-cost models (a family of model that does not predict any repulsive sequential effects), we also find, perhaps surprisingly, a significant repulsive effect of the third-to-last stimulus (p-values <0.01 for three probabilities, <0.05 for five probabilities; subjects pooled), which demonstrates the robustness of this effect (Figure 6e, right panel). Thus, in spite of the results of the model-selection procedure, some sequential effects in subjects’ predictions support only one of the two families of model. Regardless of the model that best fits their overall predictions, the behavior of the subjects is consistent only with the precision-cost family of models with Markov order equal to or greater than 1, that is, with a model of inference of conditional probabilities hampered by a cognitive cost weighing on the precision of belief distributions.

Discussion

We investigated the hypothesis that sequential effects in human predictions result from cognitive constraints hindering the inference process carried out by the brain. We devised a framework of constrained inference, in which the model subject bears a cognitive cost when updating its belief distribution upon the arrival of new evidence: the larger the cost, the more the subject’s posterior differs from the Bayesian posterior. The models we derive from this framework make specific predictions. First, the proportion of forced-choice predictions for a given stimulus should increase with the stimulus generative probability. Second, most of those models predict sequential effects: predictions also depend on the recent stimulus history. Models with different types of cognitive cost resulted in different patterns of attractive and repulsive effects of the past few stimuli on predictions. To compare the predictions of constrained inference with human behavior, we asked subjects to predict each next outcome in sequences of binary stimuli. We manipulated the stimulus generative probability in blocks of trials, exploring exhaustively the probability range from 0.05 to 0.95 by increments of 0.05. We found that subjects’ predictions depend on both the stimulus generative probability and the recent stimulus history. Sequential effects exhibited both attractive and repulsive components which were modulated by the stimulus generative probability. This behavior was qualitatively accounted for by a model of constrained inference in which the subject infers the transition probabilities underlying the sequences of stimuli and bears a cost that increases with the precision of the posterior distributions. Our study proposes a novel theoretical account of sequential effects in terms of optimal inference under cognitive constraints and it uncovers the richness of human behavior over a wide range of stimulus generative probabilities.

The notion that human decisions can be understood as resulting from a constrained optimization has gained traction across several fields, including neuroscience, cognitive science, and economics. In neuroscience, a voluminous literature that started with Attneave, 1954 and Barlow, 1961 investigates the idea that perception maximizes the transmission of information, under the constraint of costly and limited neural resources (Laughlin, 1981; Laughlin et al., 1998; Simoncelli and Olshausen, 2001); related theories of ‘efficient coding’ account for the bias and the variability of perception (Ganguli and Simoncelli, 2016; Wei and Stocker, 2015; Wei and Stocker, 2017; Prat-Carrabin and Woodford, 2021c). In cognitive science and economics, ‘bounded rationality’ is a precursory concept introduced in the 1950s by Herbert Simon, who defines it as “rational choice that takes into account the cognitive limitations of the decision maker — limitations of both knowledge and computational capacity” (Simon, 1997). For Gigerenzer, these limitations promote the use of heuristics, which are ‘fast and frugal’ ways of reasoning, leading to biases and errors in humans and other animals (Gigerenzer and Goldstein, 1996; Gigerenzer and Selten, 2002). A range of more recent approaches can be understood as attempts to specify formally the limitations in question, and the resulting trade-off. The ‘resource-rational analysis’ paradigm aims at a unified theoretical account that reconciles principles of rationality with realistic constraints about the resources available to the brain when it is carrying out computations (Griffiths et al., 2015). In this approach, biases result from the constraints on resources, rather than from ‘simple heuristics’ (see Lieder and Griffiths, 2019 for an extensive review). For instance, in economics, theories of ‘rational inattention’ propose that economic agents optimally allocate resources (a limited amount of attention) to make decisions, thereby proposing new accounts of empirical findings in the economic literature (Sims, 2003; Woodford, 2009; Caplin et al., 2019; Gabaix, 2017; Azeredo da Silveira and Woodford, 2019; Azeredo da Silveira et al., 2020).

Our study puts forward a ‘resource-rational’ account of sequential effects. Traditional accounts since the 1960s attribute these effects to a belief in sequential dependencies between successive outcomes (Edwards, 1961; Matthews and Sanders, 1984) (potentially ‘acquired through life experience’ Ayton and Fischer, 2004), and more generally to the incorrect models that people assume about the processes generating sequences of events (see Oskarsson et al., 2009 for a review; similar rationales have been proposed to account for suboptimal behavior in other contexts, for example in exploration-exploitation tasks Navarro et al., 2016). This traditional account was formalized, in particular, by models in which subjects carry out a statistical inference about the sequence of stimuli presented to them, and this inference assumes that the parameters underlying the generating process are subject to changes (Yu and Cohen, 2008; Wilder et al., 2009; Zhang et al., 2014; Meyniel et al., 2016). In these models, sequential effects are thus understood as resulting from a rational adaptation to a changing world. Human subjects indeed dynamically adapt their learning rate when the environment changes (Payzan-LeNestour et al., 2013; Meyniel and Dehaene, 2017; Nassar et al., 2010), and they can even adapt their inference to the statistics of these changes (Behrens et al., 2007; Prat-Carrabin et al., 2021b). However, in our task and in many previous studies in which sequential effects have been reported, the underlying statistics are in fact not changing across trials. The models just mentioned thus leave unexplained why subjects’ behavior, in these tasks, is not rationally adapted to the unchanging statistics of the stimulus.

What underpins our main hypothesis is a different kind of rational adaptation: one, instead, to the ‘cognitive limitations of the decision maker’, which we assume hinder the inference carried out by the brain. We show that rational models of inference under a cost yield rich patterns of sequential effects. When the cost varies with the precision of the posterior (measured here by the negative of its entropy, Equation 3), the resulting optimal posterior is proportional to the product of the prior and the likelihood, each raised to an exponent (Equation 4). Many previous studies on biased belief updating have proposed models that adopt the same form except for the different exponents applied to the prior and to the likelihood (Grether, 1980; Matsumori et al., 2018; Benjamin, 2019). Here, with the precision cost, both quantities are raised to the same exponent and we note that in this case the inference of the subject amounts to an exponentially decaying count of the patterns observed in the sequence of stimuli, which is sometimes called ‘leaky integration’ in the literature (Yu and Cohen, 2008; Wilder et al., 2009; Jones et al., 2013; Meyniel et al., 2016). The models mentioned above, that posit a belief in changing statistics, indeed are well approximated by models of leaky integration (Yu and Cohen, 2008; Meyniel et al., 2016), which shows that the exponential discount can have different origins. Meyniel et al., 2016 show that the precision-cost, Markov-observer model with (named ‘local transition probability model’ in this study) accounts for a range of other findings, in addition to sequential effects, such as biases in the perception of randomness and patterns in the surprise signals recorded through EEG and fMRI. Here we reinterpret these effects as resulting from an optimal inference subject to a cost, rather than from a suboptimal erroneous belief in the dynamics of the stimulus’ statistics. In our modeling approach, the minimization of a loss function (Equation 1) formalizes a trade-off between the distance to optimality of the inference, and the cognitive constraints under which it is carried out. We stress that our proposal is not that the brain actively solves this optimization problem online, but instead that it is endowed with an inference algorithm (whose origin remains to be elucidated) which is effectively a solution to the constrained optimization problem.

By grounding the sequential effects in the optimal solution to a problem of constrained optimization, our approach opens avenues for exploring the origins of sequential effects, in the form of hypotheses about the nature of the constraint that hinders the inference carried out by the brain. With the precision cost, more precise posterior distributions are assumed to take a larger cognitive toll. The intuitive assumption that it is costly to be precise finds a more concrete realization in neural models of inference with probabilistic population codes: in these models, the precision of the posterior is proportional to the average activity of the population of neurons and to the number of neurons (Ma et al., 2006; Seung and Sompolinsky, 1993). More neural activity and more neurons arguably come with a metabolic cost, and thus more precise posteriors are more costly in these models. Imprecisions in computations, moreover, was shown to successfully account for decision variability and adaptive behavior in volatile environments (Findling et al., 2019; Findling et al., 2021).

The unpredictability cost, which we introduce, yields models that also exhibit sequential effects (for Markov observers), and that fit several subjects better than the precision-cost models. The unpredictability cost relies on a different hypothesis: that the cost of representing a distribution over different possible states of the world (here, different possible values of ) resides in the difficulty of representing these states. This could be the case, for instance, under the hypothesis that the brain runs stochastic simulations of the implied environments, as proposed in models of ‘intuitive physics’ (Battaglia et al., 2013) and in Kahneman and Tversky’s ‘simulation heuristics’ (Kahneman et al., 1982). More entropic environments imply more possible scenarios to simulate, giving rise, under this assumption, to higher costs. A different literature explores the hypothesis that the brain carries out a mental compression of sequences (Simon, 1972; Chekaf et al., 2016; Planton et al., 2021); entropy in this context is a measure of the degree of compressibility of a sequence (Planton et al., 2021), and thus, presumably, of its implied cost. As a result, the brain may prefer predictable environments over unpredictable ones. Human subjects exhibit a preference for predictive information indeed (Ogawa and Watanabe, 2011; Trapp et al., 2015), while unpredictable stimuli have been shown not only to increase anxiety-like behavior (Herry et al., 2007), but also to induce more neural activity (Herry et al., 2007; den Ouden et al., 2009; Alink et al., 2010) — a presumably costly increase, which may result from the encoding of larger prediction errors (Herry et al., 2007; Schultz and Dickinson, 2000).

We note that both costs (precision and unpredictability) can predict sequential effects, even though neither carries ex ante an explicit assumption that presupposes the existence of sequential effects. They both reproduce the attractive recency effect of the last stimulus exhibited by the subjects. They make quantitatively different predictions (Figure 4); we also find this diversity of behaviors in subjects.

The precision cost, as mentioned above, yields leaky-integration models which can be summarized by a simple algorithm in which the observed patterns are counted with an exponential decay. The psychology and neuroscience literature proposes many similar ‘leaky integrators’ or ‘leaky accumulators’ models (Smith, 1995; Roe et al., 2001; Usher and McClelland, 2001; Cook and Maunsell, 2002; Wang, 2002; Sugrue et al., 2004; Bogacz et al., 2006; Kiani et al., 2008; Yu and Cohen, 2008; Gao et al., 2011; Tsetsos et al., 2012; Ossmy et al., 2013; Meyniel et al., 2016). In connectionist models of decision-making, for instance, decision units in abstract network models have activity levels that accumulate evidence received from input units, and which decay to zero in the absence of input (Roe et al., 2001; Usher and McClelland, 2001; Wang, 2002; Bogacz et al., 2006; Tsetsos et al., 2012). In other instances, perceptual evidence (Kiani et al., 2008; Gao et al., 2011; Ossmy et al., 2013) or counts of events (Sugrue et al., 2004; Yu and Cohen, 2008; Meyniel et al., 2016) are accumulated through an exponential temporal filter. In our approach, leaky integration is not an assumption about the mechanisms underpinning some cognitive process: instead, we find that it is an optimal strategy in the face of a cognitive cost weighing on the precision of beliefs. Although it is less clear whether the unpredictability-cost models lend themselves to a similar algorithmic simplification, they consist in a distortion of Bayesian inference, for which various neural-network models have been proposed (Deneve et al., 2001; Ma et al., 2008; Ganguli and Simoncelli, 2014; Echeveste et al., 2020).

Turning to the experimental results, we note that in spite of the rich literature on sequential effects, the majority of studies have focused on equiprobable Bernoulli environments, in which the two possible stimuli both had a probability equal to 0.5, as in tosses of a fair coin (Soetens et al., 1985; Cho et al., 2002; Yu and Cohen, 2008; Wilder et al., 2009; Jones et al., 2013; Zhang et al., 2014; Ayton and Fischer, 2004; Gökaydin and Ejova, 2017). In environments of this kind, the two stimuli play symmetric roles and all sequences of a given length are equally probable. In contrast, in biased environments one of the two possible stimuli is more probable than the other. Although much less studied, this situation breaks the regularities of equiprobable environments and is arguably very frequent in real life. In our experiment, we explore stimulus generative probabilities from 0.05 to 0.95, thus allowing to investigate the behavior of subjects in a wide spectrum of Bernoulli environments: from these with ‘extreme’ probabilities (e.g. p = 0.95) to these only slightly different from the equiprobable case (e.g. p = 0.55) to the equiprobable case itself (p = 0.5). The subjects are sensitive to the imbalance of the non-equiprobable cases: while they predict A in half the trials of the equiprobable case, a probability of just p = 0.55 suffices to prompt the subjects to predict A in about in 60% of trials, a significant difference (; sem: 0.008; p-value of t-test against null hypothesis that : 1.7e-11; subjects pooled).

The well-known ‘probability matching’ hypothesis (Herrnstein, 1961; Vulkan, 2000; Gaissmaier and Schooler, 2008) suggests that the proportion of predictions A matches the stimulus generative probability: . This hypothesis is not supported by our data. We find that in the non-equiprobable conditions these two quantities are significantly different (all p-values <1e-11, when ). More precisely, we find that the proportion of prediction A is more extreme than the stimulus generative probability (i.e. when , and when ; Figure 2a). This result is consistent with the observations made by Edwards, 1961; Edwards, 1956 and with the conclusions of a more recent review (Vulkan, 2000).

In addition to varying with the stimulus generative probability, the subjects’ predictions depend on the recent history of stimuli. Recency effects are common in the psychology literature; they were reported from memory (Ebbinghaus et al., 1913) to causal learning (Collins and Shanks, 2002) to inference (Shanteau, 1972; Hogarth and Einhorn, 1992; Benjamin, 2019). Recency effects, in many studies, are obtained in the context of reaction tasks, in which subjects must identify a stimulus and quickly provide a response (Hyman, 1953; Bertelson, 1965; Kornblum, 1967; Soetens et al., 1985; Cho et al., 2002; Yu and Cohen, 2008; Wilder et al., 2009; Jones et al., 2013; Zhang et al., 2014). Although our task is of a different kind (subjects must predict the next stimulus), we find some evidence of recency effects in the response times of subjects: after observing the less frequent of the two stimuli (when ), subjects seem slower at providing a response (see Appendix). In prediction tasks (like ours), both attractive recency effects, also called ‘hot-hand fallacy’, and repulsive recency effects, also called ‘gambler’s fallacy’, have been reported (Jarvik, 1951; Edwards, 1961; Ayton and Fischer, 2004; Burns and Corpus, 2004; Croson and Sundali, 2005; Oskarsson et al., 2009). The observation of both effects within the same experiment has been reported in a visual identification task (Chopin and Mamassian, 2012) and in risky choices (‘wavy recency effect’ Plonsky et al., 2015; Plonsky and Erev, 2017). As to the heterogeneity of these results, several explanations have been proposed; two important factors seem to be the perceived degree of randomness of the predicted variable and whether it relates to human performance (Ayton and Fischer, 2004; Burns and Corpus, 2004; Croson and Sundali, 2005; Oskarsson et al., 2009). In any event, most studies focus exclusively on the influence of ‘runs’ of identical outcomes on the upcoming prediction, for example, in our task, on whether three As in a row increases the proportion of predictions A. With this analysis, Edwards (Edwards, 1961) in a task similar to ours concluded to an attractive recency effect (which he called ‘probability following’). Although our results are consistent with this observation (in our data three As in a row do increase the proportion of predictions A), we provide a more detailed picture of the influence of each stimulus preceding the prediction, whether it is in a ‘run’ of identical stimuli or not, which allows us to exhibit the non-trivial finer structure of the recency effects that is often overlooked.